Advanced GitLab Pipelines

Written by Dominik Pantůček on 2025-10-23

CI, racket, scribble, javascript

Sometimes you need to build multiple interdependent artifacts in your CI/CD pipelines. And sometimes you can set up your workflow in a non-blocking way for many of them. Read on to see what we are using in our GitLab instance.

In one of our latest projects we found ourselves needing to build multiple components. The project uses a React.js frontend and a Racket-driven backend. Both platforms come with documentation and testing tools that we wanted to integrate into our CI/CD pipeline to ensure smooth development in the long run.

We also wanted to keep pipeline running times short, as long pipelines could slow down our development pace. Luckily, only some jobs depend on other jobs' results; the rest have no dependencies at all, so they do not have to wait for anything.

As we integrate the frontend into the backend, the frontend has to be built first. Only after the frontend has been built is it possible to build the backend binary. How do we do it? With GitLab CI/CD pipelines it is rather straightforward:

    frontend-build:
      stage: frontend
      needs: []
      script:
        - cd frontend
        - npm install
        - CI=false npm run build
      artifacts:
        paths:
          - frontend/dist
          - frontend/node_modules

    backend-build:
      stage: backend
      needs:
        - job: frontend-build
      script:
        - raco make app.rkt
        - raco exe --orig-exe app.rkt
      artifacts:
        paths:
          - app
          - '**/compiled/*'

In our case, job artifacts are not only the final result (most of the time they are not the final result at all); they serve as intermediate data to be processed in later steps of the pipeline. In the above specification, the frontend build creates some files and directories that we store and then reuse in the backend build job. Then, and this is the interesting part, the backend build job stores not only the resulting binary but also all files under the compiled/ directories for later use.
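The hand-off described above can be spelled out in the job definitions themselves. The following is a minimal sketch rather than our exact configuration: `artifacts: true` under `needs` only writes out GitLab's default behaviour, and the `expire_in` value is an assumption added to show how intermediate artifacts could be cleaned up automatically.

```yaml
frontend-build:
  stage: frontend
  needs: []
  script:
    - cd frontend
    - npm install
    - CI=false npm run build
  artifacts:
    paths:
      - frontend/dist
      - frontend/node_modules
    # Assumption: intermediate files do not need to be kept for long.
    expire_in: 1 week

backend-build:
  stage: backend
  needs:
    - job: frontend-build
      # Default value, written out: fetch frontend-build's artifacts
      # into the working directory before the script runs.
      artifacts: true
  script:
    - raco make app.rkt
    - raco exe --orig-exe app.rkt
  artifacts:
    paths:
      - app
      - '**/compiled/*'
```

Setting `artifacts: false` on a `needs` entry would keep the ordering but skip the download, which can be handy when a job only has to wait for another one.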

The really useful part comes from the raco make command, which precompiles all the Racket sources into *.zo files that later instrumentation can reuse. That means that when we build documentation or check test coverage, most of the compilation has already been performed in the backend build step.
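To illustrate how a later job could build on the precompiled files, here is a hedged sketch of a test job. The job name `backend-test` and the stage name `test` are hypothetical and not part of the pipeline described in this post; `raco test` is the standard Racket test runner, and the sketch assumes the *.zo files from the artifacts are picked up as described above.

```yaml
# Hypothetical job, shown only to illustrate reuse of the compiled/ artifacts.
backend-test:
  # Assumed stage name; it would have to be declared in the top-level stages list.
  stage: test
  needs:
    # Brings in the app binary and the **/compiled/* files stored by backend-build.
    - job: backend-build
  script:
    # Runs the test submodules of app.rkt.
    - raco test app.rkt
```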

And yes, the very next step is building the backend documentation using our good old friend Scribble. The job specification is simple:

    backend-doc:
      stage: documentation
      needs:
        - job: backend-build
        - job: frontend-build
      script:
        - racket tools/render-docs.rkt doc
      artifacts:
        paths:
          - doc/

As can be expected, the backend documentation job takes the precompiled files and other dependencies from the frontend and invokes a custom online documentation renderer. The result is the usual doc/ directory Scribble produces, which contains a nice, browsable documentation structure.

In the meantime, the frontend uses JSDoc for documentation, so building the frontend documentation can run in parallel with the backend jobs already described.

    frontend-doc:
      stage: documentation
      needs:
        - job: frontend-build
      script:
        - cd frontend
        - node node_modules/jsdoc/jsdoc.js -c jsdoc.conf.json
      artifacts:
        paths:
          - doc/

For various reasons we chose to build the frontend first and only then build the documentation in parallel to building the backend.
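The jobs name three stages, which GitLab expects to find in a top-level `stages` list. The post does not show that list, so the order below is an assumption inferred from the job dependencies, and the full pipeline may well contain more stages.

```yaml
# Assumed stage list; only the stage names themselves appear in the jobs above.
stages:
  - frontend
  - backend
  - documentation
```

Because frontend-doc declares `needs`, it starts as soon as frontend-build finishes and does not wait for the backend stage to complete, even though its own stage comes later in the list. That is what lets it run alongside the backend build.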

With everything ready, we can build our PDF documentation using wkhtmltopdf and Scribble's native PDF support:

    pdf-doc:
      stage: documentation
      needs:
        - job: backend-doc
        - job: frontend-doc
        - job: backend-build
        - job: frontend-build
        - job: user-doc
      script:
        - mkdir -p pdfdoc
        - wkhtmltopdf doc/frontend/global.html pdfdoc/frontend.pdf
        - scribble --pdf --dest pdfdoc --dest-name backend.pdf tools/render-docs.scrbl
        - mv user-doc-cz.pdf pdfdoc/
      artifacts:
        paths:
          - pdfdoc/
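The long `needs` list has a practical reason: a job that uses `needs` downloads artifacts only from the jobs it lists, not from the jobs those depend on in turn. The annotated copy below sketches what each entry contributes. The comments are inferred from the job definitions in this post, and the one on user-doc is a guess, since that job is not shown here.

```yaml
pdf-doc:
  stage: documentation
  needs:
    - job: backend-doc     # doc/ directory rendered by Scribble
    - job: frontend-doc    # doc/ directory rendered by JSDoc
    - job: backend-build   # app binary and the compiled/ directories
    - job: frontend-build  # frontend/dist and frontend/node_modules
    - job: user-doc        # assumption: provides user-doc-cz.pdf
  script:
    - mkdir -p pdfdoc
    - wkhtmltopdf doc/frontend/global.html pdfdoc/frontend.pdf
    - scribble --pdf --dest pdfdoc --dest-name backend.pdf tools/render-docs.scrbl
    - mv user-doc-cz.pdf pdfdoc/
  artifacts:
    paths:
      - pdfdoc/
```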

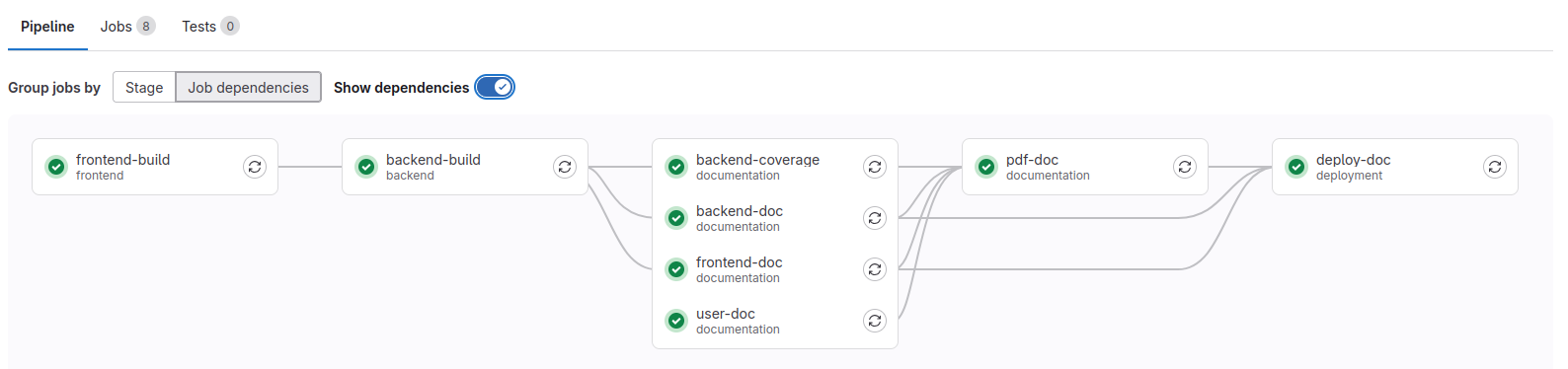

Everyone should get the idea by now. However the whole pipeline we use is slightly more complex in the end:

Hope you liked our venture into the realm of advanced CI/CD pipelines in GitLab and see ya next time!